How do you decide if the risk of a security feature is worth it? If the cure can be as bad as the illness how do you decide? We have opinions!

To agent or not agent, the CrowdStrike question

Some thoughts on the CrowdStrike events at a broad level. We don’t provide the type of capabilities they do, so I don’t feel comfortable inveighing on what happened and how it happened. But, we do have some thoughts on when to take the risk of deploying agents into your systems.

At Cohesive we have a standard response to customers wanting to install <fill in the blank agent> into our VNS3 Network and Security platform virtual appliances. It is always a difficult situation as it is being pushed by the security team and CISO, at what tends to be our larger, more revenue significant customers. It is difficult because our answer is a polite, yet vehement “no”.

The VNS3 control plane should only be open to an allow-listed operations ingress address. The data plane should be open to the ports and services you use our platform for. (We are often a cloud edge firewall, people vpn, data center vpn, waf, and more.) The biggest liability to the security and operational integrity of our devices would be to add an agent that can be exploited or can itself cause an outage. This has happened in the industry (but not to us) numerous times over the recent years via things like SolarWinds, Microsoft OMS agent, and now CrowdStrike to name a few.

One of the oft-used phrases at Cohesive is “horses for courses” and we use that British idiom as part of our approach to making architectural and deployment security recommendations. With respect to the panoply of agents that vendors and security teams alike argue to be installed in all your systems, we think it is quite apropos. We think you need to look at what the purpose and placement of the computing devices is in order to make this decision.

If it is a computer used by a person, especially hybrid use or remote use, how are are you going to protect it? For the most part you can’t protect the network it is on, since it is running on networks you don’t control.

There are some options outside of agenting, for example if using Windows operating system you could use group policy to force the machine onto a virtual network with no ability to access the Internet other than through your VPN’s edge, but it is bad user experience and expensive to have acceptable points of presence.

And even if forcing users onto a VPN, what about email phishing links and attachments, what about Microsoft Office macro exploits that leak into your shared drives? Super tough, and I am glad we aren’t in that business.

People make mistakes. Even well-trained, diligent people can make mistakes in where they browse to or what they click on, and you need to be able to address possible exploits there at the point of attack. So I can’t really blame someone for wanting to believe these types of products are the answer.

At Cohesive which admittedly is not a huge company, our answer has evolved from ‘Microsoft Office is disallowed’, to now ‘Windows is not allowed’. Right there the “people attack surface” is minimized. However, that is a long migration or a “never migration” for many companies. In these cases, I think “agent away” and be judicious in the vendor you choose.

What about business servers, the computers that are not the personal device of an end-user, but rather are there to perform a function like database or API serving or message queuing? Again, for Cohesive, easier in that Windows is not allowed, business services are built on Linux. That said, whether Windows or Linux, in these scenarios why are you ‘protecting’ the computer instead of the network? Those business servers are not going to click on links, browse bad websites, or download malware. They are automatons performing specific, repetitive operations.

This is where we are skeptical of the agent approach and will not allow it for our network appliances, and as applicable would not allow for business services. (CrowdStrike apparently created a similar issue previously on some Linux distributions: https://www.theregister.com/2024/07/21/crowdstrike_linux_crashes_restoration_tools)

In these cases the VNS3 Network Platform (or similar vendor offerings) is there to protect and monitor ingress and egress to the network, along with other elements of what should be thought of as a security lattice. Using cloud or data center virtualization, you have your cloud network security groups and network ACLs, along with your OS firewall, both of which should be working in tandem with network appliances like VNS3. You can then run WAF or IPS at these ingress/egress points as well – run them inside VNS3 or run them via an appropriate cloud service offering.

We think these are the rules to follow:

- Computer primarily used by human and it has to be Windows, agent it and anti-virus it.

- Computer primarily used by human and is Mac or Linux, anti-virus it.

- Computer used by computers, both micro-segment and protect the network, use a security lattice. The risk from any agents are too high.

- (OPTIONAL) Computers used by computers are ideally Linux servers using the distributions’ minimal install with at least some elements of CIS hardening.

But then you loudly proclaim, “we need visibility into the VNS3 (or other) appliances for our <DataDog, Syslog Server, SumoLogic, AlertLogic, etc..>!”

OK – you got us. We DO allow visibility to logs, network configuration and statistics, netflow, and some API calls for system status to plugins that run inside VNS3 – but don’t have full host access.

When you are running VNS3 controllers, whether a single one or a larger cluster, you are running a mini “docker cloud” inside your virtual network. We have a set of pre-built plugins as well as a published set of elements needed to make any open source, proprietary source, or commercial source that can be containerized, into a compliant plugin. This gives you the ability to put analysis and monitoring INTO the network, yet not be able to disrupt the network security device; observability with security.

(And now the sound of Cohesive patting itself on the back for this balanced approach to agenting.)

Cisco owes me a sandwich!

In 2010 we were so excited to meet with a team at Cisco to discuss our network function virtualization offering that had been live in the clouds since 2008.

The folks at Futuriom (@rayno, aka R. Scott Raynovich) have put up a nice piece about Cisco’s struggles to integrate its many acquisitions and fundamentally retain relevance. Now of course Cisco is a behemoth compared to Cohesive Networks, we are a lean and cute marsupial next to it the hulking mammal, but we do compete with them all the time and they do represent the other side of about 50% of all our customer’s connections. (My point being we are quite familiar.)

So, way back when cloud was young – our parent company CohesiveFT developed a very strong champion at Cisco.

He loved what we did with the VNS3 platform (then the VPN-cubed appliance) and was both fascinated and skeptical that via an API you could create any over-the-top network and route traffic through it, indifferent to the actual underlying networks. You could have a flat network anywhere with dynamic routing protocols in place, a form of server motion which separated network location from network identity, thus enabling very flexible and secure application systems. In many ways, unbeknownst even to ourselves, in 2008 we had created the first commercial cloud native NFV (network function virtualization) solution with a restful API.

We ended up invited to Cisco campus in California for what would be a pretty significant (for us) meet up with some of their key technical and business development decision makers about our virtual appliances. We were thrilled and hoped for a partnership to accelerate our business.

The calendar snippet above shows that it was a morning meeting – and maybe I have conflated events through time, as in my memory it was thereafter rescheduled into a longer, later lunch meeting (catering provided) with time for a demo and hopefully some whiteboarding with the team.

The meeting was well attended by our champion, key technical people and decision makers.

We launched into our pitch about how you could build networks anywhere you had access to compute and bulk network ingress/egress. Some questions came and went – and we were thrilled to be talking about the flexibility and security that comes with network virtualization.

At about 20 minutes in, product managers were scribbling away in their notebooks, but then the “biggest” of the technical folks looked up and said “Wait, wait, this is a hardware appliance, right?”

“No, no,” we say, “it’s virtual; all software, really flexible.”

To which he said, “No one will ever put network traffic through software” and he closed his notebook, stood up, and began exiting the room.

With the exception of our champion, everyone else followed.

As we were leaving the room, there was the catering cart outside that had not yet had a chance to come in to our truncated meeting. My memory is sandwiches – but maybe it was breakfast items, regardless it was now NOT on offer, and we were exited from the building.

So, two takeaways, network virtualization was real, driven by software and took Cisco a long time to get their heads around, and I think Cisco owes me a sandwich (or perhaps a croissant).

Migrating to IPv6 – Managing the Madness (Pt. 1)

Names, Names, Names.

Its the year enterprises get focused on moving to Internet 6. We think it is mostly because of the AWS IPv4 address price increase, but as good a reason as any.

While we don’t expect you to start using ALL of the eighteen quintillion, four hundred forty-six quadrillion, seven hundred forty-four trillion, seventy-three billion, seven hundred nine million, five hundred thousand addresses in your cloud VPCs and VNETs, do remember nature abhors a vaccuum.

If you combine the enormity of the address spaces, and the somewhat impossible-to-verbalize representation of addresses, using addresses as a point of reference at the surface of network administration, monitoring, or security is not going to work much longer.

It is time to let loose your “wordsmiths”!

All of those devices running around your organization need a name, and in fact once we start naming things it won’t stop, we will have multiple names per device, because if one name is good, more are even better.

Of course organizations have been using DNS for years, and the Internet runs on it, but for B2B, enterprise, SaaS we have seen widely varying depths of use.

Some of our more advanced customers have adopted one or more taxonomies for naming devices in their production SaaS environments, albeit perhaps not throughout their internal organization. It can be somewhat of the cobbler’s children having no shoes, we do pay attention to our customers, but often not to ourselves. Here is an anonymized example of such a SaaS taxonomy:

<saas service name abbreviation>.<cloud>.<cloud account abbreviation>.<cloud region>.<production status flag>.<customer id>.<customer abbreviation>.<cost center id>.<machine id>.<cloud instance id>.domain

It is certainly a mouthful, and in fact more so than “fc55:636f:6865:7369:7665::6440:1fe3”, but since most humans DON’T do hex/decimal calculations in their heads, it works by providing understanding via the delineated labels in the full name.

Once you see this type of naming at work in administration and monitoring, you can then see why devices end up with multiple DNS names based on differing organizational taxonomies. We have see technical, organizational, asset management, project-based, and functional naming schemes for devices. At Cohesive we aren’t quite that complex, but if you ask Chatgippety or the LLM of your choice for examples they will provide some overly simplistic examples which give you the gist.

- dell.laptop.8gb.intel.corei5.lenovo.windows.10.ssd.departmentC.corporate.com

- marketing.sales.promotions.abrown.laptop.hr.central.branch.corp.com

- asset.laptop.s345678.la.abrown.decommissioned.finance.southern.us.corp.com

- project.gamma.phase3.teamC.abrown.task3.testing.chicago.corp.com

- function.sales.system.crm.module3.midwest.corp.com

Further Reading

If you have all of naming nailed, good on you. If you don’t here is a great overview by the folks at Cloudflare who create some great content for all of us: https://www.cloudflare.com/learning/dns/what-is-dns/.

For those who are more visual, here is excellent work from a redditor who seemed to have become inactive (sadly) three years back:

https://www.reddit.com/r/programming/comments/klaffg/dns_explained_visually_in_10_minutes/.

For more on the emerging challenges of the IPv6 world you can look back at a few Cohesive posts:

- https://cohesive.net/blog/moving-to-ipv6-herein-lies-madness-part-1/

- https://cohesive.net/blog/moving-to-ipv6-herein-lies-madness-part-2/

And if IPv6 addressing still has you perplexed the team at Oracle did a super job here:

https://docs.oracle.com/cd/E18752_01/html/816-4554/ipv6-overview-10.html.

AND – never forget the Rosetta Stone of IPv4 to IPv6 understanding here:

https://docs.google.com/spreadsheets/d/1pIth3KJH1RbQFJvZmZmpBGq6rMMBhqEmfK-ouSNszNY.

Next up we will show you how VNS3 version 6.6 for Internet6 will help you adopt IPv6 for cloud edge and cloud interior, provding network functions that are better, faster, cheaper than the competing offerings (to NAME just a few reasons to get a Cohesive network).

Moving to IPv6 – herein lies madness (Part 2)

This post is short and essential.

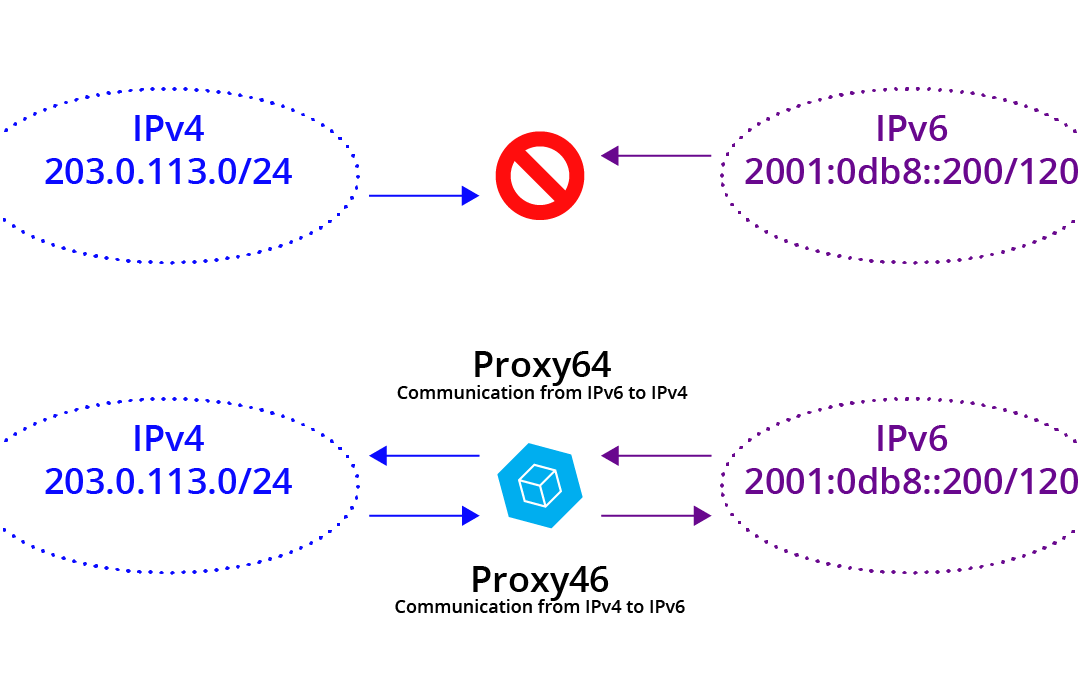

The are two Internets now: the IPv4 Internet and the IPv6 Internet.

The IPv6 Internet is not related to the IPv4 Internet as you have configured it inside, outside, or at the edge of your Enterprise.

IPv6 is not the next version of the Internet protocol; it is the next Internet.

This is the best way to think about it in order to have a more intuitive feel of what is happening underneath it all.

AND – the two Internets never meet. Meaning they are side by side, and applications can move data between them, but in their essence they are different Internets.

Both Internets move across the same layer 2 and physical links, and the protocols above them largely work the same on both, but they are distinct at layer 3, where addressing and naming and routing and firewalling and flow control and many other Internet-y things happen.

A computer may exist on either or both Internets concurrently; an application may bind a network socket to either or both; but they are entirely separate and unrelated.

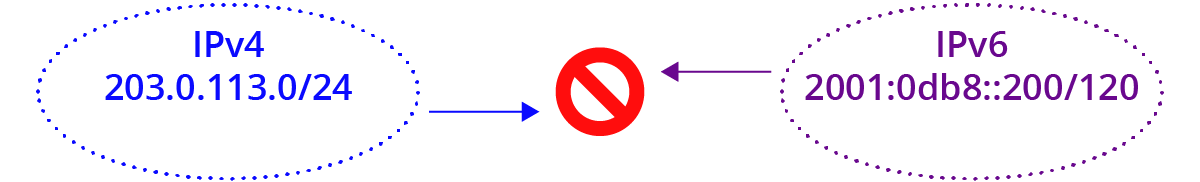

You will hear about NAT64 (and some NAT46) and the implicit description that addresses are being translated from 6-to-4. I am sure that is the name that will “win” in the industry, but this is a place where we will stand apart. We are calling it Proxy64 and Proxy46, as that is a more realistic and familiar description of what is actually happening. It is not address “translation” in the long standing sense, it is packet TRANSMOGRIFICATION* (according to rules which are in some ways “NAT-like.”) It pulls the payload out of an IPv6 packet and creates an entirely new IPv4 packet. (Some concepts don’t even translate well between the two; these cases are handled by different sofware anywhere from “poorly” to “confusingly.”)

Cohesive’s VNS3 6.6.x is moving out into customer’s hands and with it, our Proxy64 and Proxy46 plugins for communicating between the two Internets.

Let us know how we can help your teams get connections up and running at cloud edge using IPv6.

(ps. If you are just coming up to speed on IPv6 here is a good primer from Microsoft. The “producer-centric” nature of IPv6 comes through, but is a good summary of the industry description and belief systems (don’t let the ‘dotnet’ in the URL scare you).

*Yes that is a C&H reference

Moving to IPv6 – herein lies madness (Part 1)

Well, it’s finally happened. It’s the “year of IPv6” for the enterprise.

With this transition will come a significant amount of “cognitive load” for enterprise application and infrastructure administrators.

After a decade or more, it appears there will finally be a broad-based move to Internet Protocol version 6. All it took was for Amazon to announce a price increase for the use of IPv4 (Internet Protocol version 4) addresses.

IPv6 has been being deployed incrementally since 2012 – but for many of us it has been via background adoption by consumer products that we may not even been aware of; cable companies and mobile phone vendors providing the infrastructure to our homes and devices.

For the b2b software and infrastructure technology used by the enterprise, it remains new and novel. For example, Amazon Cloud has supported some IPv6 for a number of years, both dual stack and single stack, whilst Microsoft Azure Cloud only began in earnest in 2023. Both platforms still have significant limitations and fragmentation of support across products.

So if the tech titans are just getting their heads around IPv6, what are us “normies” supposed to do?

At Cohesive Networks, our plan was to start slipping in support over the course of this year, finishing sometime in 2025 for an expected enterprise demand in 2026. Why haven’t we supported to date? Frankly, “no one uses it” – in that of the SaaS, PaaS, and BPaaS companies we support, none of them have asked for it……until the AWS price increase. Our metal-based competitors like Cisco and Palo Alto have supported it for years, but from our broad experience working with our customers, and our customer’s customers, and our customer’s customer’s outsourced network support people, the feature was a tree falling in the forest.

While there is a need to expand the available address pool, there is conflict and confusion between the “producer-centric” intelligence that created IPv6 and its revisions and implementations to date, and the practical needs, the “consumer-centric” needs, of the people just trying to get their jobs done.

There is a disconnect between the simple needs that we satisfy with IPv4; deploy a server, deploy 10 servers, deploy a cluster of a couple hundred servers; and the massive scale of IPv6 which immediately confronts its business consumers.

At Cohesive, we have done a decent job of reducing much of networking and security to “addresses, routes, and rules.” But even for us, helping customers deal with three hundred forty undecillion, two hundred eighty-two decillion, three hundred sixty-six nonillion, nine hundred twenty octillion, nine hundred thirty-seven septillion addresses is a challenge.

Ok, that number above is the whole space, so maybe I am being overly dramatic.

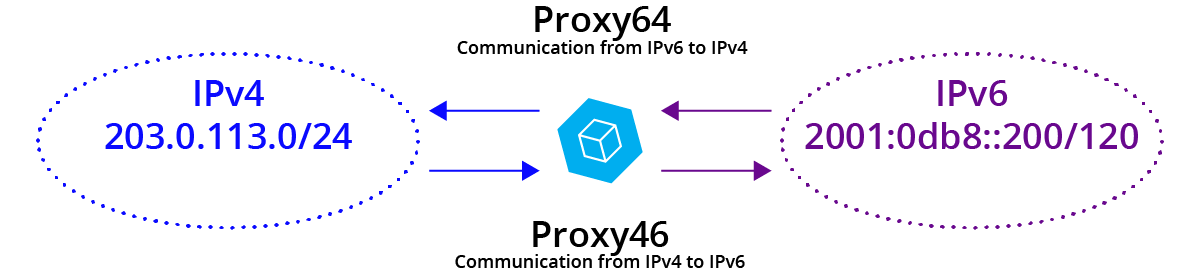

Let’s just get an AWS VPC or Azure VNET with a single IPv6 subnet; that should be easier to deal with.

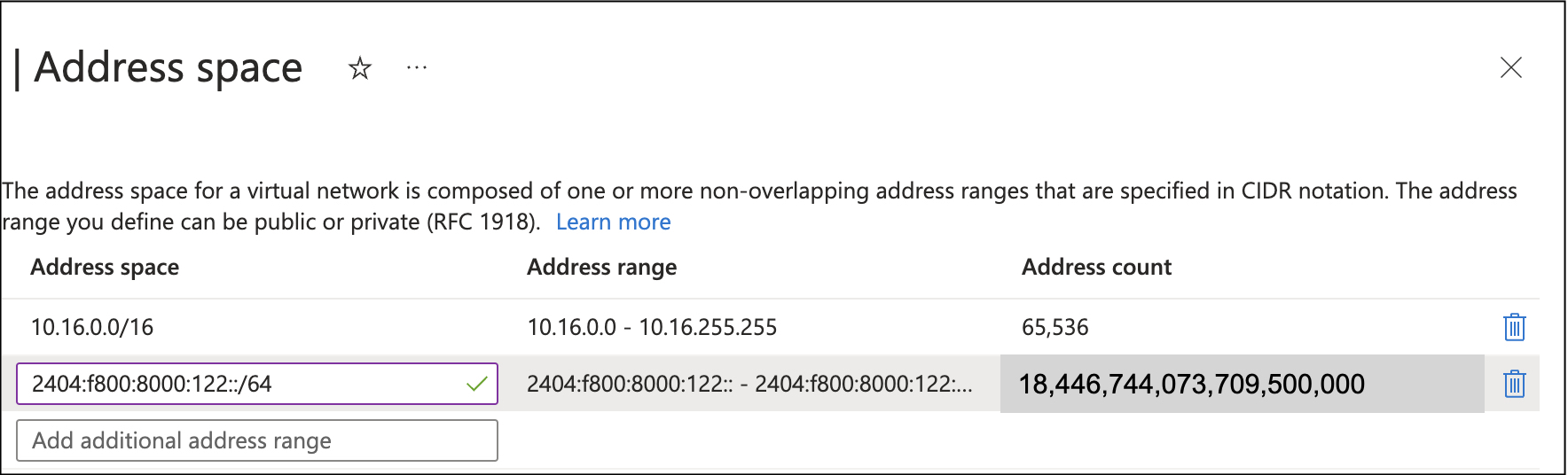

Minimal size is a /64, that sounds better. Oops, that is 18,446,744,073,709,500,000 addresses! (For those of you speaking it aloud, that’s eighteen quintillion, four hundred forty-six quadrillion, seven hundred forty-four trillion, seventy-three billion, seven hundred nine million, five hundred thousand addresses).

And for every virtual network/subnet – another eighteen quintillion.

Now, if I have 10, 100 or 1000 servers to deploy – what does it matter? Just use some addresses and carry on.

True enough, but the fact that they come from a potential space of 1.8 x 10^19 is serious cognitive load that is presented to the infrastructure operators, and it is naive to think this doesn’t come at a cost. As computer memory went from 512k on a “FAT MAC” to 96G on my MacbookPro, I didn’t have to confront the gap between 524,288 bytes and 103,079,215,104 bytes in the normal course of my work.

So what do we do? Well for our part, as we release VNS3 6.6 (with IPv6), we are reducing some of this cognitive load and will be working with our customers to ever-simplify the move to IPv6.

In the meantime, here is a Google Spreadsheet we created for our internal use to help us get our heads around the magnitude of the IPv6 space. Feel free to share: https://docs.google.com/spreadsheets/d/1pIth3KJH1RbQFJvZmZmpBGq6rMMBhqEmfK-ouSNszNY/edit#gid=0

Stand Apart – but be Cohesive

I received a number of congratulations on the LinkedIn platform last month when apparently it let people know I have been with Cohesive Networks for 9 years.

As I reflected on this one thing stands out in relief, and that is Cohesive has always “stood apart” within the markets it serves.

Cohesive Networks exists at the confluence of virtualization, cloud, networking and security. We are of course a part of this commercial market but have not been re-directed by every twist and turn of these markets that have come and gone. Flavor and fashion are often the order of the day, and no doubt we have adopted some of the descriptive labels through time. But the labels have not swayed us from our essential mission, getting customers to, through and across the clouds safely.

If you look at the Gartner hype cycle for cloud networking it provides a gallery of differentiators through time: network observability, container/kubernetes networking, multicloud networking, SASE, SSE, Network-as-a-Service, SD-branch, network automation, network function virtualization, software defined networking, zero trust networks, software-defined WAN, micro-segmentation. If you look at these different facets, the tools, techniques, and knowledge to implement these are not that different. What is different is the packaging and the go-to-market.

Because we have been providing a virtual network platform since the clouds began, we have had customers use us for all of these functions. As a result the good news is we have been less “swayed” by flavor and fashion, which has done well for our existing customer. The bad news is we haven’t always best communicated our value to the potential new customer who may be thinking very much in the context of one of these re-packaged approaches.

Our usual inbound leads are “Hi, I think we need your stuff” or “Hi, we are using a lot of your stuff, can we discuss volume discounts and support plans”.

This communication posture stems from the fact that we have often been ahead of our competitors in either implementation or insight, or both. We were the first virtual network appliance in the EC2 cloud, IBM Cloud, many of the “clouds no-more”, and at later dates among the first network and security appliances in Azure and Google.

Cohesive’s patents are for “user controlled networking in 3rd party computing environments”. It is a subtle distinction – but powerful, between what an infrasturcture administrator, network administrator or hypervisor administrator could do at that time, and the freedom of our users on that infrastructure to create any network they wanted. These are encrypted, virtual networks that their infrastructure providers know nothing about, can not see, nor control. Our users in 2009 and beyond could create any secure, mesh, over-the-top network they wanted without the need or expense of the network incumbents who came before us. These customers find us significantly easier, significantly less expensive and made for the cloud without “metal” roots dragging them back to the ground.

When we showed this capability to investors, partners, infra providers, acquirerers – many of them said “I don’t get it” or “we’ll do better (someday)” or “you have to do all this in hardware” and more. By comparison, the customers who found us through web search, cloud conferences, and cloud marketplaces said “that’s what I need” and a subscription cloud networking company was born.

Looking back, it is shocking that our first offering was “virtual subnets”. It is amazing to think about it, but we sold subnets! At that time any cloud you used put your virtual instances in a very poorly segmented 10.0.0.0/8 subnet of the compute virtualization environment. People wanted to control addresses, routes, and rules, and our encrypted mesh overlay provided it. That was our first product-market fit, and of course that has evolved considerably.

This set us on the path of listening to our customers, and asking ourselves quite fundamentally what a network is, and what is it used for, and by whom?

So while we stood apart, of course we were Cohesive.

Networks are all about standards and interoperability, we just did it our own way.

The feedback from customers was “yes” and “more please”. The feedback from the experts often remained “huh”. So we pressed forward in our own autonomic and somewhat autistic manner, only listening to certain market signals and obsessively working to fulfill a vision of networking and security that says “networks are addresses, routes and rules, everything else is implementation detail the customers should rarely see.”

Now in 2024 and beyond, as the chaotic streams of the changing cloud markets, Internet Protocol v6, and the needs for secure AI and private AI collide, my guess is we will still stand apart while still being Cohesive.

Recent Comments