
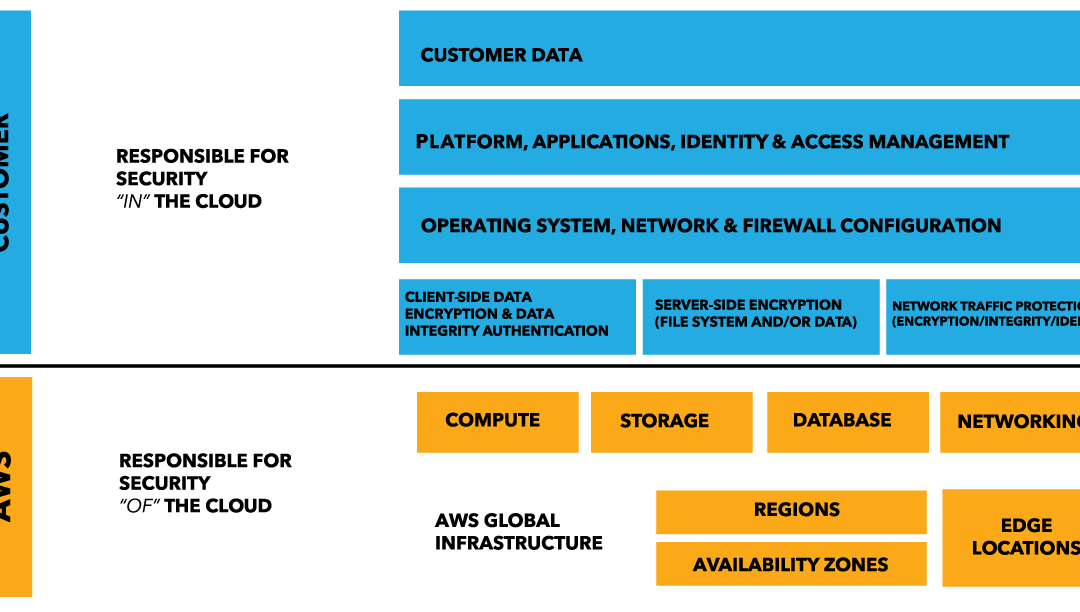

AWS Defense in Depth Overview

Layers of security bolster defenses for any application, database, or critical data. In a traditional data center, physical network isolation meant building walls for physical security. In the cloud, providers such as AWS and Azure build the walls and fences and comply with regulations and standards like ITAR and SOC 1. This is the provider-owned and completely provider-controlled security that providers supply to users.

Up the cloud stack, users can add more layers of defense: logical segmentation at the virtualization layer and application segmentation at the application layer. Three key ways to add network security are provider-owned, user-controlled features: virtual private clouds (aka VLAN isolation), port filtering, and static assignable public IP addresses.

AWS allows users to control certain features and services but ultimately owns them. The cloud user is responsible for setting up, maintaining, and updating these features. One example is port filtering on the host operating system, which prevents packets from ever reaching a virtual adapter. Users configure the hypervisor firewall through network mechanisms such as security groups or configuration files, and can limit rules to allow only the ports each application needs.

AWS Defense in Depth

AWS is responsible for security of the cloud. AWS users are responsible for security in the cloud.

Customer data and applications are completely controlled by AWS users. AWS provides security features including IAM, firewalls, port filtering (security groups), and network protection but users must enable, maintain and control those features.

AWS Provider-Owned/User-Controlled Security

Identity and Access Management (IAM)

In AWS, the identity and access management (IAM) service allows users to create specific accounts for each person/role that needs AWS access.

In a new AWS account, the initial account is the “root account,” with full access to all services and controls in the account. After configuring the administrator roles and access, shift all administrative activities in the console to the assigned roles. Before deleting the root access key, you can first deactivate it to test for any issues. You can then delete the root access key to prevent any outside access with it.

Force MFA for all AWS users

From the IAM console, you can add multi-factor authentication (MFA) for all users. First, enable MFA on the root account. Next, require all AWS users to configure MFA by attaching a “force MFA” IAM policy to each user. Note that once you enable “force MFA,” a user is denied all other permissions until they set up MFA and log in using MFA.
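As a rough illustration, a “force MFA” policy can be written as a single Deny statement that applies whenever a request was not authenticated with MFA. The Python sketch below only builds the policy document as JSON and does not call AWS. The statement ID and the exact list of actions left available before MFA is set up are assumptions for illustration, so adjust them to your own onboarding flow.

```python
import json

# Actions a user may still perform before MFA is configured.
# This list is an assumption for illustration; tailor it to your needs.
ALLOWED_WITHOUT_MFA = [
    "iam:CreateVirtualMFADevice",
    "iam:EnableMFADevice",
    "iam:GetUser",
    "iam:ListMFADevices",
    "iam:ListVirtualMFADevices",
    "iam:ResyncMFADevice",
    "sts:GetSessionToken",
]


def build_force_mfa_policy():
    """Return a policy document that denies everything except MFA setup
    when the request was not authenticated with MFA."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyAllExceptMfaSetupWithoutMfa",  # hypothetical Sid
                "Effect": "Deny",
                "NotAction": ALLOWED_WITHOUT_MFA,
                "Resource": "*",
                # BoolIfExists also matches requests where the key is absent,
                # such as calls made with long-term access keys.
                "Condition": {
                    "BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}
                },
            }
        ],
    }


force_mfa_policy_json = json.dumps(build_force_mfa_policy(), indent=2)
```

The resulting JSON can be pasted into the IAM console as a managed policy and attached to users or, more conveniently, to a group that contains them.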

Use IAM roles for all services

AWS IAM allows you to create roles that give users or AWS infrastructure the permissions they need to access other AWS services. For example, IAM roles for EC2 can limit which users can launch an instance and which S3 permissions an EC2 instance receives.
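To make the EC2 and S3 example more concrete, a role is typically described by two documents: a trust policy that says who may assume the role, and a permissions policy that says what the role may do. The sketch below builds both as plain JSON in Python without calling AWS. The bucket name `example-bucket` and the choice of read-only S3 actions are assumptions for illustration.

```python
import json

BUCKET = "example-bucket"  # hypothetical bucket name


def build_ec2_trust_policy():
    """Trust policy: lets the EC2 service assume the role on behalf of instances."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def build_s3_read_policy(bucket):
    """Permissions policy: read-only access to a single bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


trust_policy_json = json.dumps(build_ec2_trust_policy(), indent=2)
s3_read_policy_json = json.dumps(build_s3_read_policy(BUCKET), indent=2)
```

Scoping the permissions policy to one bucket and to read actions only keeps the role close to least privilege, so an instance that is compromised cannot write to or delete data in S3.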

AWS Key Management Service

AWS Key Management Service (KMS) is a service for creating and controlling encryption keys. KMS uses Hardware Security Modules (HSMs) to protect keys in AWS.

CloudTrail

CloudTrail is an AWS service that records API calls for your account and delivers log files. A trail that delivers log files is not created by default, so you must set one up. The recorded history includes API calls made via the Management Console, SDKs, command line tools, and higher-level AWS services. CloudTrail API call history enables security analysis, resource change tracking, and compliance auditing.
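As one possible form of security analysis, the delivered log files can be scanned for API calls you consider sensitive. CloudTrail log files are JSON documents with a top-level `Records` array. The sketch below parses a small, made-up sample and does not read from S3. The sample records, the ARNs in them, and the watch list of event names are assumptions for illustration.

```python
import json

# A tiny, fabricated sample in the shape of a CloudTrail log file.
SAMPLE_LOG = json.dumps({
    "Records": [
        {
            "eventTime": "2016-11-03T12:00:00Z",
            "eventSource": "ec2.amazonaws.com",
            "eventName": "DescribeInstances",
            "sourceIPAddress": "203.0.113.10",
            "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"},
        },
        {
            "eventTime": "2016-11-03T12:05:00Z",
            "eventSource": "cloudtrail.amazonaws.com",
            "eventName": "StopLogging",
            "sourceIPAddress": "203.0.113.10",
            "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::123456789012:user/alice"},
        },
    ]
})

# Event names treated as sensitive here; this list is an assumption.
WATCHED_EVENTS = {
    "StopLogging",
    "DeleteTrail",
    "CreateAccessKey",
    "AuthorizeSecurityGroupIngress",
}


def find_watched_events(log_text, watched=WATCHED_EVENTS):
    """Return (time, event name, caller ARN, source IP) for each watched API call."""
    records = json.loads(log_text).get("Records", [])
    return [
        (
            r.get("eventTime"),
            r.get("eventName"),
            r.get("userIdentity", {}).get("arn"),
            r.get("sourceIPAddress"),
        )
        for r in records
        if r.get("eventName") in watched
    ]


findings = find_watched_events(SAMPLE_LOG)
```

With the sample above, only the `StopLogging` call is reported, since `DescribeInstances` is not on the watch list.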

AWS Config

AWS Config is a managed service that creates a resource inventory, configuration history, and configuration change notifications for security and governance. AWS Config lets you export a complete inventory of your AWS resources with all configuration details. AWS Config helps enable compliance auditing, security analysis, and resource change tracking.

AWS Trusted Advisor

AWS Trusted Advisor inspects the AWS environment and finds opportunities to save money, improve system performance and reliability, or help close security gaps.

Amazon Inspector

Amazon Inspector is an automated security assessment service that can assess applications for vulnerabilities or deviations from best practices. Amazon Inspector includes a knowledge base of hundreds of rules mapped to common security compliance standards (e.g., PCI DSS) and vulnerability definitions.

AWS Networking Security

Security groups = firewalls for inbound and outbound traffic to and from your EC2-VPC instances. Security group characteristics include:

- By default, outbound traffic is allowed

- Rules are allow-only (you can’t write a rule that denies access)

- Add / remove rules at any time

- You can copy the rules from an existing security group to a new security group

- Security groups are stateful — if you send a request from your instance, the response traffic for that request is allowed to flow in regardless of inbound security group rules

To create a security group rule, specify the following:

- The protocol to allow (such as TCP, UDP, or ICMP)

- For TCP, UDP, or a custom protocol: The range of ports to allow

- For ICMP: The ICMP type and code

- Choose one of the following options for the source (inbound rules) or destination (outbound rules):

  - An individual IP address, in CIDR notation (for example, 203.0.113.1/32)

  - An IP address range, in CIDR notation (for example, 203.0.113.0/24)

  - The name or ID of a security group, which allows instances associated with the specified security group to access instances associated with this security group
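The rule fields above can be modeled in a few lines of Python to show how allow-only matching behaves. This is a simplified sketch and not how AWS implements security groups: it covers only TCP and UDP rules with a CIDR source, and it leaves out ICMP types, security group references, and stateful return traffic. The class name, function name, and example rules are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    protocol: str     # "tcp" or "udp" in this simplified model
    from_port: int    # first port in the allowed range
    to_port: int      # last port in the allowed range
    source_cidr: str  # e.g. "203.0.113.0/24"


def is_inbound_allowed(rules, protocol, port, source_ip):
    """Allow-only semantics: traffic passes if any rule matches.
    Anything that matches no rule is implicitly denied."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        rule.protocol == protocol
        and rule.from_port <= port <= rule.to_port
        and addr in ipaddress.ip_network(rule.source_cidr)
        for rule in rules
    )


# Example: HTTPS open to everyone, SSH only from one office range.
WEB_RULES = [
    IngressRule("tcp", 443, 443, "0.0.0.0/0"),
    IngressRule("tcp", 22, 22, "203.0.113.0/24"),
]

https_from_anywhere = is_inbound_allowed(WEB_RULES, "tcp", 443, "198.51.100.7")
ssh_from_office = is_inbound_allowed(WEB_RULES, "tcp", 22, "203.0.113.25")
ssh_from_elsewhere = is_inbound_allowed(WEB_RULES, "tcp", 22, "198.51.100.7")
```

Because there is no deny rule to write, tightening access means narrowing or removing allow rules, which is why limiting each group to the ports an application actually needs matters.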

Network access control lists (ACLs) = act as a firewall for associated subnets, controlling both inbound and outbound traffic at the subnet level.

The following are the parts of a network ACL rule:

- Rule number. Rules are evaluated starting with the lowest numbered rule. As soon as a rule matches traffic, it’s applied regardless of any higher-numbered rule that may contradict it.

- Protocol. You can specify any protocol that has a standard protocol number. For more information, see Protocol Numbers. If you specify ICMP as the protocol, you can specify any or all of the ICMP types and codes.

- [Inbound rules only] The source of the traffic (CIDR range) and the destination (listening) port or port range.

- [Outbound rules only] The destination for the traffic (CIDR range) and the destination port or port range.

- Choice of ALLOW or DENY for the specified traffic.

*NOTE: Network ACLs are similar to security groups, but ACLs filter traffic at the subnet level. Security groups are stateful, while network ACLs are stateless.*
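The rule-number ordering described above can be sketched as a first-match evaluation: rules are sorted by number, the first match decides, and traffic that matches nothing is denied. The Python model below is a simplification under stated assumptions. It handles only TCP and UDP with a CIDR and a port range, and the class name, function name, and example rules are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AclRule:
    number: int     # lower numbers are evaluated first
    protocol: str   # "tcp" or "udp" in this simplified model
    cidr: str       # source (inbound) or destination (outbound) range
    from_port: int
    to_port: int
    action: str     # "ALLOW" or "DENY"


def evaluate_acl(rules, protocol, ip, port):
    """Return the action of the lowest-numbered matching rule.
    If no rule matches, the traffic is denied."""
    addr = ipaddress.ip_address(ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if (
            rule.protocol == protocol
            and addr in ipaddress.ip_network(rule.cidr)
            and rule.from_port <= port <= rule.to_port
        ):
            return rule.action
    return "DENY"


# Listed out of order on purpose: rule 100 still wins over rule 200.
INBOUND_RULES = [
    AclRule(200, "tcp", "0.0.0.0/0", 443, 443, "ALLOW"),
    AclRule(100, "tcp", "198.51.100.0/24", 443, 443, "DENY"),
]

blocked_range = evaluate_acl(INBOUND_RULES, "tcp", "198.51.100.7", 443)
other_client = evaluate_acl(INBOUND_RULES, "tcp", "203.0.113.5", 443)
unmatched = evaluate_acl(INBOUND_RULES, "tcp", "203.0.113.5", 22)
```

Since network ACLs are stateless, a real configuration would also need a separate outbound rule list that allows the response traffic. This sketch evaluates one direction only.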

Elastic IP address (EIP) = static IP address associated with your AWS account. Use EIPs to mask the failure of an instance or software by rapidly remapping the address to another instance in your account.

An Elastic IP address is a public IP address, reachable from the Internet. If your instance does not have a public IP address, you can associate an Elastic IP address with your instance to enable communication with the Internet.

When you associate an Elastic IP address with an instance in EC2-Classic, a default VPC, or an instance in a nondefault VPC in which you assigned a public IP to the eth0 network interface during launch, the instance’s current public IP address is released back into the public IP address pool. If you disassociate an Elastic IP address from the instance, the instance is automatically assigned a new public IP address within a few minutes.

Further Reading: “10 AWS security blunders and how to avoid them,” by Fahmida Y. Rashid. Originally published on InfoWorld, Nov 3, 2016.

Next up, use user-provided, user-owned features to add application-layer security.

Services like SSL/TLS termination, load balancing, caching, proxies, and reverse proxies can also add application-layer security. Additionally, tailoring security policies to each application can be more effective than applying complex, blanket security policies across multiple applications.
